One of the less glamorous but nice-to-have features in NSX 6.2.3 is the ability to trigger a failover of NSX Edge appliances. As an SE, my most common use case for this is a proof of concept (POC) with a customer. There are certainly many other use cases, ranging from testing and validating that your failover setup actually works, to DR plans, to troubleshooting, and the list goes on. I am sure you can think of others that haven't occurred to me.

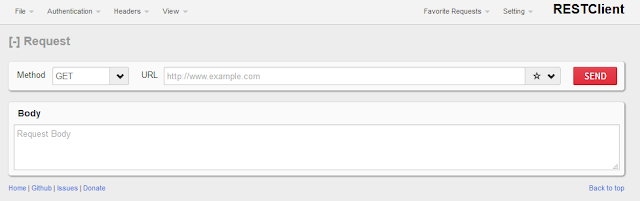

Today this ability requires an API call to execute, which is good for me because I need to force myself to get more comfortable in the API world. What can I say: 21 years of CLI on Novell NetWare, IBM S/390 and AS/400s, plus plenty of Cisco terminal time, has ingrained habits that are hard to break. So how do I get started with APIs? Easy enough: search for "REST API plugin" for your browser and you'll find one. I am using "RESTClient" in Firefox, and it looks like this.

In my home network I have an Edge Services Gateway (ESG) configured in Active/Standby mode. You can find out which appliance is active by running the "show service highavailability" command from the Edge appliance CLI.

To set the HA admin state to down, you use the REST API for the specific NSX Edge appliance you are working with. The setting itself is the <haAdminState> element (for example, "<haAdminState>up</haAdminState>") in the NSX Edge appliance section of the API. In my environment I first needed to do a GET operation to find out what the rest of the values required to complete the call were.
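If you'd rather script this than click through a browser plugin, here is a minimal sketch of that GET using only the Python standard library. The URL pattern /api/4.0/edges/{edgeId}/appliances/{haIndex}, the manager hostname nsxmgr.example.local and the admin/secret credentials are my assumptions for illustration, so check the NSX API guide for your version before relying on them.

```python
import base64
import urllib.request

# Hypothetical values for illustration only; substitute your own
# NSX Manager, credentials, Edge ID and HA index.
NSX_MANAGER = "nsxmgr.example.local"
EDGE_ID = "edge-2"
HA_INDEX = 1


def appliance_url(manager: str, edge_id: str, ha_index: int) -> str:
    """Build the (assumed) NSX 6.x URL for a single Edge appliance."""
    return f"https://{manager}/api/4.0/edges/{edge_id}/appliances/{ha_index}"


def basic_auth_header(user: str, password: str) -> str:
    """Return an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return f"Basic {token}"


def build_get_request(manager, edge_id, ha_index, user, password):
    """Prepare (but do not send) the GET that returns the <appliance> XML."""
    return urllib.request.Request(
        appliance_url(manager, edge_id, ha_index),
        method="GET",
        headers={
            "Authorization": basic_auth_header(user, password),
            "Accept": "application/xml",
        },
    )


if __name__ == "__main__":
    req = build_get_request(NSX_MANAGER, EDGE_ID, HA_INDEX, "admin", "secret")
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req).read().decode()
```

The request is only built, not sent, so you can inspect it first; a lab NSX Manager with a self-signed certificate will also need an SSL context passed to urlopen.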

The response I got is shown below. It includes the resource pool (cluster) ID, host ID, VM ID, and other attributes of the guest. Most important is the new haAdminState element, which is up, meaning our active/standby pair is working as expected.

<appliance>
  <highAvailabilityIndex>1</highAvailabilityIndex>
  <vcUuid>501bc163-173d-85eb-f8f5-a90eabc15595</vcUuid>
  <vmId>vm-196</vmId>
  <haAdminState>up</haAdminState>
  <resourcePoolId>domain-c31</resourcePoolId>
  <resourcePoolName>DC1-MgmtEdge</resourcePoolName>
  <datastoreId>datastore-51</datastoreId>
  <datastoreName>Synology</datastoreName>
  <hostId>host-43</hostId>
  <hostName>dc1-edge02.fuller.net</hostName>
  <vmFolderId>group-v22</vmFolderId>
  <vmFolderName>vm</vmFolderName>
  <vmHostname>NSX-edge-2-1</vmHostname>
  <vmName>dc1-edge-01-1</vmName>
  <deployed>true</deployed>
  <edgeId>edge-2</edgeId>
  <configuredResourcePool>
    <id>domain-c31</id>
    <name>DC1-MgmtEdge</name>
    <isValid>true</isValid>
  </configuredResourcePool>
  <configuredDataStore>
    <id>datastore-51</id>
    <name>Synology</name>
    <isValid>true</isValid>
  </configuredDataStore>
</appliance>
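Flipping the state is just an edit to that one element. Here is a small sketch with Python's xml.etree.ElementTree that takes the appliance XML and returns it with haAdminState changed; SAMPLE is a trimmed copy of the response above, and the helper names are my own, not part of any NSX tooling.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the GET response shown above (only a few elements kept).
SAMPLE = """<appliance>
  <highAvailabilityIndex>1</highAvailabilityIndex>
  <vmId>vm-196</vmId>
  <haAdminState>up</haAdminState>
  <vmHostname>NSX-edge-2-1</vmHostname>
  <edgeId>edge-2</edgeId>
</appliance>"""


def get_ha_admin_state(appliance_xml: str) -> str:
    """Read the current <haAdminState> value."""
    return ET.fromstring(appliance_xml).findtext("haAdminState")


def set_ha_admin_state(appliance_xml: str, state: str) -> str:
    """Return the appliance XML with <haAdminState> set to 'up' or 'down'."""
    if state not in ("up", "down"):
        raise ValueError("state must be 'up' or 'down'")
    root = ET.fromstring(appliance_xml)
    node = root.find("haAdminState")
    if node is None:
        # The element only exists on releases that support the feature.
        raise ValueError("no <haAdminState> element; is this NSX 6.2.3 or later?")
    node.text = state
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(set_ha_admin_state(SAMPLE, "down"))
```

In practice you would feed it the full response body from the GET rather than the trimmed sample, so every other value goes back to the manager unchanged.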

Now we have the details we need to change the state of our Edge appliance. All we need to do is a PUT of the same appliance body with haAdminState set to down instead of up.
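For the PUT, a standard-library sketch might look like this. As with the GET, the URL pattern, hostname and credentials are assumptions for illustration; the body would be the edited appliance XML, and the send helper shows one way to tolerate a self-signed lab certificate.

```python
import base64
import ssl
import urllib.request

# Hypothetical URL using the same assumed pattern as the GET (HA index 1).
URL = "https://nsxmgr.example.local/api/4.0/edges/edge-2/appliances/1"


def build_put_request(url: str, appliance_xml: str, user: str, password: str):
    """Prepare (but do not send) a PUT carrying the edited <appliance> body."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=appliance_xml.encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/xml",
        },
    )


def send(req, verify_tls: bool = True) -> int:
    """Send the request and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    ctx = ssl.create_default_context()
    if not verify_tls:
        # Lab only: accept a self-signed NSX Manager certificate.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx) as resp:
        return resp.status
```

Building the request separately from sending it makes it easy to print and double-check the body before you actually fail over a production Edge.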

Being a big fan of "trust but verify," how do we confirm the change was made? Let's connect to the Edge appliance VM and take a look. The status is now Standby, and we can see the timestamp of the state change.

If we check the now-active Edge appliance, we see what we'd expect: Active, with a timestamp earlier than when the previously active appliance became standby.

Next, let's see what the logs look like when the PUT was executed. Below are the initial entries I see on what was the standby as it transitions to active. There are dozens of other messages associated with startup, but I wanted to capture the state change. Note the use of Bidirectional Forwarding Detection (BFD). BFD is one of the neater protocols out there, IMHO. I'll blog about it in the future, but know that I think BFD is a Big Freaking Deal (BFD). That's nerd humor for you. ;)

2016-07-05T13:46:02+00:00 NSX-edge-2-0 lcp-daemon: [daemon.notice] ovs|00079|lcp|INFO|status/get handler
2016-07-05T13:46:02+00:00 NSX-edge-2-0 lcp-daemon: [daemon.notice] ovs|00080|lcp|INFO|ha_node_is_active: id 0 status 1 active 0 (0)
2016-07-05T13:46:02+00:00 NSX-edge-2-0 lcp-daemon: [daemon.notice] ovs|00081|lcp|INFO|ha_node_is_active: id 1 status 1 active 1 (0x1)
2016-07-05T13:52:29+00:00 NSX-edge-2-0 bfd: [daemon.notice] ovs|00029|bfd_main|INFO|Send diag change notification to LCP
2016-07-05T13:52:29+00:00 NSX-edge-2-0 lcp-daemon: [daemon.notice] ovs|00082|lcp|INFO|Processing BFD notification
2016-07-05T13:52:29+00:00 NSX-edge-2-0 lcp-daemon: [daemon.notice] ovs|00083|lcp|INFO|BFD session for 169.254.1.5:169.254.1.6 state: Up (0) diag: Admin Down (7)
2016-07-05T13:52:29+00:00 NSX-edge-2-0 lcp-daemon: [daemon.notice] ovs|00084|lcp|INFO|ha_node_set_active: node 1 active 0
2016-07-05T13:52:29+00:00 NSX-edge-2-0 lcp-daemon: [daemon.notice] ovs|00085|lcp|INFO|Node 1 status changed from Up to Admin Down
2016-07-05T13:52:29+00:00 NSX-edge-2-0 lcp-daemon: [daemon.notice] ovs|00086|lcp|INFO|HA state Standby, processing event BFD State Updated reason Updated
2016-07-05T13:52:29+00:00 NSX-edge-2-0 lcp-daemon: [daemon.notice] ovs|00087|lcp|INFO|ha_node_is_active: id 1 status 0 active 0 (0)

This state change persists across a reboot, as you can see below.

A simple PUT with haAdminState set back to up is all that is needed to return this appliance to service as the standby. It's a pretty neat feature that was added in 6.2.3.
